BIT-101

Bill Gates touched my MacBook Pro

I know, I just did a post entitled Genuary Wrap. So what’s this all about?

Well, mostly, the wrap was just about my last six entries. Every week I did a post showing the previous six or seven entries, so I just wanted to wrap up that series.

But Genuary 2024 was a pretty intense experience for me, and I wanted to say a few words about it as a whole. And there is one piece I wanted to do a deeper dive into. I could have actually stretched this into two more posts, but I need to move on.

As I just said, this was pretty intense. Thirty one prompts. Some were easy. Some were hard. Some were super interesting and I was excited to do them. Some I really didn’t want to go near. But it was good to get outside of my comfort zone.

Some of the prompts I liked the least were “do something in this particular style” or “… in the style of this particular artist.” It felt a bit too art-schooly. But OK, I did them and got something out of each one.

I do like the fact that the rules are so loose. “You don’t have to follow the prompt exactly. Or even at all. But, y’know, we put effort into this.” There were definitely days where I stretched the bounds of the prompt almost beyond recognition. I just took things where they led me.

Here are some of the things I learned, discovered, re-learned, re-discovered, took new deep dives into, etc.:

This was my favorite piece of the month, and probably the one I spent the most time on. There’s a lot going on here, and a lot that went into it, so I wanted to deconstruct it a little.

The prompt is “skeuomorphism”. Here’s a definition:

A skeuomorph … is a derivative object that retains ornamental design cues (attributes) from structures that were necessary in the original. Skeuomorphs are typically used to make something new feel familiar in an effort to speed understanding and acclimation. They employ elements that, while essential to the original object, serve no pragmatic purpose in the new system.

From https://en.wikipedia.org/wiki/Skeuomorph

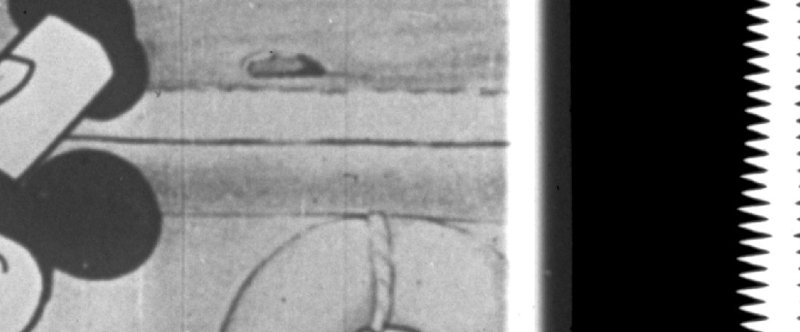

I wasn’t sure what I wanted to do for this. At some point I ran across this image:

From https://hackaday.com/2024/01/24/steamboat-willie-never-sounded-better/

This is a frame from Steamboat Willie, which recently went into the public domain, so is seeing a lot of use. I was interested in the jagged shape on the right edge. It’s the soundtrack, optically encoded into the film.

I’d thought of doing stuff like that before - encoding audio in a bitmap frame. Currently, I don’t have a way to analyze or play back audio in my Go-based workflow. There are libraries for that, but I didn’t want to go that deep for this piece. But I thought, what else can I encode in a bitmap? How about another bitmap??? Crazy, right?

The skeuomorphism became a replica of reading an image off of a tape drive. Rather than encoding the image as spots of magnetic charge, I wanted to try encoding the bits in pixels. Insane, but worth trying.

First I built an encoder. It takes a 100x100 pixel, grayscale image and loops through each pixel. Since it’s grayscale, I can get each pixel as a 256 level value. One byte. Eight bits. Each bit is a black or white pixel. Each byte goes into one column of a 10,000 by 8 pixel image.

Here’s an 800-pixel-wide crop of that image:

And here it is zoomed in 10x:

You can see each column of the image is made up of eight pixels that are either black or white and represent one bit of that 8-bit byte.

With that in place, next came the decoding.

First, I loaded the encoded data - the virtual “tape” containing the encoded bits of the image. I wanted to display it as it was reading, so I again scaled it by 10x. But it came out looking like this:

Not great. The problem is the filter used in scaling. The default one in the Cairo graphics library interpolates, which usually gives a better result. But when you’re zooming in on individual pixels, you need a different filter. Unfortunately, my library had not implemented the different scaling filters, so I had to build out the C library wrappers for those. It wasn’t a big deal, and now I have them there if I need them in the future.

Now I just go through the encoded bitmap, column by column. I sample each pixel in each column, turn them into bits, then into a single byte, set the color value based on that byte, and draw a single pixel.

I visually scrolled the encoded bitmap, but that’s just for show - so you can see which column is currently being read.

The final movie runs at 60fps, and it’s processing one pixel per frame, so it’s pretty damn slow. Just like reading data off of a tape drive.

So, like a true skeuomorph, this whole process serves no pragmatic purpose whatsoever. It’s literally encoding a bitmap into another bitmap that is eight times as large. Eight times larger in pixel count, that is, though only 1k bigger in disk size. But it was a fun project that offered several cool challenges, and people seemed to like it a lot on Mastodon.

Comments? Best way to shout at me is on Mastodon.