More ffmpeg tips

Palettes

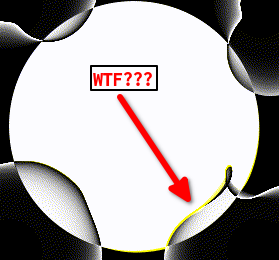

In a recent post, I shared how I make animated gifs with ffmpeg. I hadn’t been doing it very long, so I wasn’t sure how it would work in the long run. Lo and behold, I ran into a problem. I was making black and white (actually monochrome grayscale) gifs and for the most part it was going well. But then I saw some yellow getting in there!

None of the still images had anything but grayscale values. So how was I getting yellow? To be honest, I’m not sure of the details 100%, but it’s got to do with palettes. Gifs generally have just 256 available colors. There are tricks to make animated gifs use more than that, but let’s stick with the basic case. 256. The frames you create for your animations will likely be pngs, which means they can have millions of colors. Somehow, ffmpeg needs to take all those millions of colors and choose just 256 for the gif.

Last time, I posted this command:

ffmpeg -framerate 30 -i frames/frame_%04d.png out.gif

This was working pretty well for my grayscale gifs, but if you tried using it for full color animations, there’s a good chance you ended up with a mess. Because you basically got a random palette. I don’t know how the palette is chosen in that case, but there’s a damn good chance it’s not going to be right. So you need to create a decent palette. That’s done with a palettegen filter. First you run ffmpeg to generate a new 16×16 pixel image (256 pixels) that contains the palette it thinks it should use. That looks like this:

ffmpeg -i frames/frame_%04d.png -vf palettegen palette.png

I’ll keep the same flow of going through each parameter:

-i frames/frame_%04d.png – chooses the frames that contain the palette data.

-vf palettegen – a video filter. The filter is palettegen which generates a palette.

palette.png – the output image holding the palette.

The result, as I said, is just a 16×16 png image. You can open it up and see your palette. This has been done by analyzing all the images in the sequence and determining which 256 colors can be used across the entire animation to make things look decent.

Now you use this palette image in another call to ffmpeg:

ffmpeg -framerate 30 -i frames/frame_%04d.png -i palette.png -filter_complex paletteuse out.gif

Once again, I’ll break it down.

-framerate 30 – the fps just like before.

-i frames/frame_%04d.png – the input frames, just like before.

-i palette.png – yet another input, this time, the palette image.

-filter_complex paletteuse – this is where the magic happens.

out.gif – the output image, just like before.
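If you run these two steps a lot, they can be wrapped in one small shell function. This is only a sketch under a few assumptions: `frames_to_gif` is a made-up name, the palette lives in a temporary directory purely for convenience, and `-y` is passed on the second call so an existing output file gets overwritten without a prompt.

```shell
# Sketch of a two-pass gif build. frames_to_gif is a hypothetical helper name,
# not something ffmpeg provides.
# Usage: frames_to_gif 'frames/frame_%04d.png' 30 out.gif
# The body is in ( ) so it runs in a subshell and its variables do not leak.
frames_to_gif() (
  pattern=$1
  fps=$2
  out=$3

  # Keep the palette in a throwaway directory so a stale one is never reused.
  tmpdir=$(mktemp -d) || exit 1
  palette="$tmpdir/palette.png"

  # Pass 1: analyze every frame and write the palette image.
  # Pass 2: encode the gif using that palette (-y overwrites without asking).
  ffmpeg -i "$pattern" -vf palettegen "$palette" &&
    ffmpeg -y -framerate "$fps" -i "$pattern" -i "$palette" \
      -filter_complex paletteuse "$out"
  status=$?

  rm -rf "$tmpdir"
  exit "$status"
)
```

The second pass only runs if the palette was generated successfully, and the function returns ffmpeg's exit status either way.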

So the filter_complex one is pretty complex, especially if you try to look up the documentation or examples. You’ll find examples like this (IGNORE THESE!):

ffmpeg -i input.mp4 -filter_complex "[0:v]scale=iw:2*trunc(iw*16/18), \
boxblur=luma_radius=min(h\,w)/20:luma_power=1:chroma_radius=min(cw\,ch)/20: \
chroma_power=1[bg];[bg][0:v]overlay=(W-w)/2:(H-h)/2,setsar=1" output.mp4

or…

ffmpeg -i bg.mp4 -i video1.mp4 -i video2.mp4 -filter_complex \
"[0:v][1:v]setpts=PTS-STARTPTS,overlay=20:40[bg]; \
[bg][2:v]setpts=PTS-STARTPTS,overlay=(W-w)/2:(H-h)/2[v]; \
[1:a][2:a]amerge=inputs=2[a]" \
-map "[v]" -map "[a]" -ac 2 output.mp4

You’ll generally find these on Stack Overflow, with instructions like, “Just do this…” and no explanations at all on why you should JUST do that.

If you’re lucky, you’ll at least find something like…

-filter_complex "fps=24,scale=${SIZE}:-1:flags=lanczos[x];[x][1:v]paletteuse"

I stripped away everything but the filter_complex part there. This one actually came from a good friend, Kenny Bunch, who saw my last article and happened to be digging into the exact same stuff at the same time, but with full color animations.

This one was still more complex than I needed, but it was the simplest example I found, so I was very thankful.

So back to basics. filter_complex is a way of defining a… well, a complex filter. You define the filter in a string and can chain together multiple actions to do all kinds of fancy things. The way it works is a series of filter actions that result in an output, and then you can use that output in further actions. Like this, broken down per action:

do first filter action[a]; does the first filter action and stores the output in a variable a.

[a] do another filter action[b]; feeds the output a into the next action, and saves that as b.

[b] yet another action[x]; you get the idea.

So in the above example:

fps=24,scale=${SIZE}:-1:flags=lanczos[x]; Sets the fps to 24, scales the gif to a given size on x and keeps the aspect ratio on y (the -1 param), uses Lanczos resampling to do the scaling. Stores the result in x.

[x][1:v]paletteuse Takes the data in x and uses input 1 (the palette image) as a palette using the paletteuse filter. In short, uses the palette image as the palette for the animation.
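To make the label chaining concrete, here is a small sketch that assembles that same filter string from an fps and a width. `gif_filter_graph` is a hypothetical helper name, and 24 and 320 in the usage comment are just example values standing in for the fps and `${SIZE}`.

```shell
# gif_filter_graph is a hypothetical helper that prints a two-step filter graph.
# Step 1 sets the fps and scales input 0, then labels the result [x].
# Step 2 feeds [x] plus input 1 (the palette image) into paletteuse.
# Usage: gif_filter_graph FPS WIDTH
gif_filter_graph() {
  printf 'fps=%s,scale=%s:-1:flags=lanczos[x];[x][1:v]paletteuse' "$1" "$2"
}

# Example use (assumes palette.png has already been generated):
# ffmpeg -i frames/frame_%04d.png -i palette.png \
#   -filter_complex "$(gif_filter_graph 24 320)" out.gif
```

The semicolon separates the two actions, and the `[x]` label is the only thing connecting them.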

In my case, I’d already set the framerate. And I wasn’t scaling anything, so I could get rid of that whole first action. And apparently, ffmpeg was smart enough to figure out that input 0 was the frames and input 1 was the palette, so I could shorten the entire thing down to:

-filter_complex paletteuse

Magical.
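The less magical explanation, going by the ffmpeg documentation, is that a filter input with no label gets connected to the first unused input stream of the matching type. So paletteuse picks up the frames first and the palette second, in the order the inputs were given. If you would rather spell that out, the sketch below should be equivalent; `apply_palette` is a made-up wrapper name and the equivalence is an assumption based on that documented behavior.

```shell
# apply_palette is a hypothetical wrapper. The explicit [0:v][1:v] labels are
# assumed to be equivalent to the bare "paletteuse" form:
# input 0 is the frame sequence, input 1 is the palette image.
# Usage: apply_palette FPS FRAME_PATTERN PALETTE OUTPUT
apply_palette() {
  ffmpeg -framerate "$1" -i "$2" -i "$3" \
    -filter_complex '[0:v][1:v]paletteuse' "$4"
}

# Example use:
# apply_palette 30 'frames/frame_%04d.png' palette.png out.gif
```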

Using these two commands, I was able to get a correctly paletted animation, with no yellow.

I still don’t know exactly why I was getting yellow, since all the source frames were completely grayscale. I guess it was just the complexity of calculating the values of all the channels of all the pixels from all the frames. Somewhere along the line, it wound up with a bit less in the blue channel for some reason. And once it started, it just multiplied.

Anyway, couldn’t help thinking of this…

Speed and Size

Other considerations when deciding whether to use ffmpeg or ImageMagick are rendering speed and file size.

ffmpeg will create gifs way faster than ImageMagick. A quick test:

Input: 300 frames, 500×500 pixels each.

ImageMagick: 29.985 seconds

ffmpeg: 4.301 seconds

And the ffmpeg test included generating the palette as well as the animation.

File size is not so good a story though. Same animation:

ImageMagick: 13 MB

ffmpeg: 27 MB

Other examples weren’t quite that bad, but ImageMagick always wins handily in the size category.

Of course, the other thing I mentioned last time was that ImageMagick will consistently use so much memory that the process just crashes and fails, whereas ffmpeg never has a problem in that area.

Just Say Yes

One last trick, for when you’re scripting these commands and your script keeps stopping to ask whether you want to overwrite your previous output gif with a new animation. Just add the -y parameter into your command and it won’t bother you anymore.
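In a script, one way to avoid forgetting the flag is a tiny wrapper that always adds it. `render_gif` here is a hypothetical name, nothing built in.

```shell
# render_gif is a hypothetical wrapper that always passes -y, so an existing
# output file is overwritten without a prompt.
render_gif() {
  ffmpeg -y "$@"
}

# Example use:
# render_gif -framerate 30 -i frames/frame_%04d.png -i palette.png \
#   -filter_complex paletteuse out.gif
```

If you want the opposite behavior, ffmpeg also has -n, which refuses to overwrite and exits right away when the output file already exists.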
